This is Synthetic Mind, your 5-minute A.I newsletter that’s like the A.I version of the Fresh Prince of Bel-Air… We’re fresh, fun, and full of style.

It’s Wednesday. Let’s roll.

🔌 ChatGPT’s New Update Changes Everything…

💥 FAKE A.I Image Causes REAL Market Drop

🤖 OpenAI Plans For Superintelligence

🐟 Byte Of The Day

🧠 Mind Memes

Read Time: 5 min

🔌 ChatGPT’s New Update Changes Everything…

Bard is like the little brother that can’t get out of his older brother’s shadow…

No matter how hard he tries, ChatGPT keeps stealing his spotlight.

Despite Bard’s rough start, he turned heads with a string of new updates earlier this month…

Then OpenAI dropped this update:

ChatGPT can now access the internet via Bing plugins

Until now, ChatGPT’s knowledge stopped in 2021, which meant it fell behind Bard, who gained internet access a while ago.

But with the new update, ChatGPT is all anyone can talk about.

Poor Bard.

As of now, only ChatGPT Plus users have access to the plugin.

Here’s how to access it:

In the next few weeks, ALL ChatGPT users will have access.

With this, OpenAI has leveled the playing field yet again.

Alright, Bard…

Your move.

🤝 Together With Masterworks

The first AI-powered startup unlocking the “billionaire economy” for your benefit

Art is one of the oldest markets in the world, yet most don’t know there’s big money in it.

Honestly, it’s the best-kept investing secret around.

But then a Harvard data scientist and his team cracked the code with a system to identify “excess alpha”…

People are raking it in.

Masterworks, the company that’s made it all possible, helps investors like you invest in blue-chip art for a fraction of the cost.

Their proprietary database of art market returns provides an unrivaled quantitative edge in analyzing investment opportunities.

So far, it's been right on the money.

Every one of their 13 exits has been profitable, with recent exits delivering +10.4%, +13.9%, and +35.0% net annualized returns.

Want to check it out? Skip the waitlist with our exclusive referral link.

See important disclosures at masterworks.com/cd

💥 Fake A.I Image Causes REAL Market Drop

Fake news is nothing new, especially when A.I is involved.

But I must say this one takes the cake.

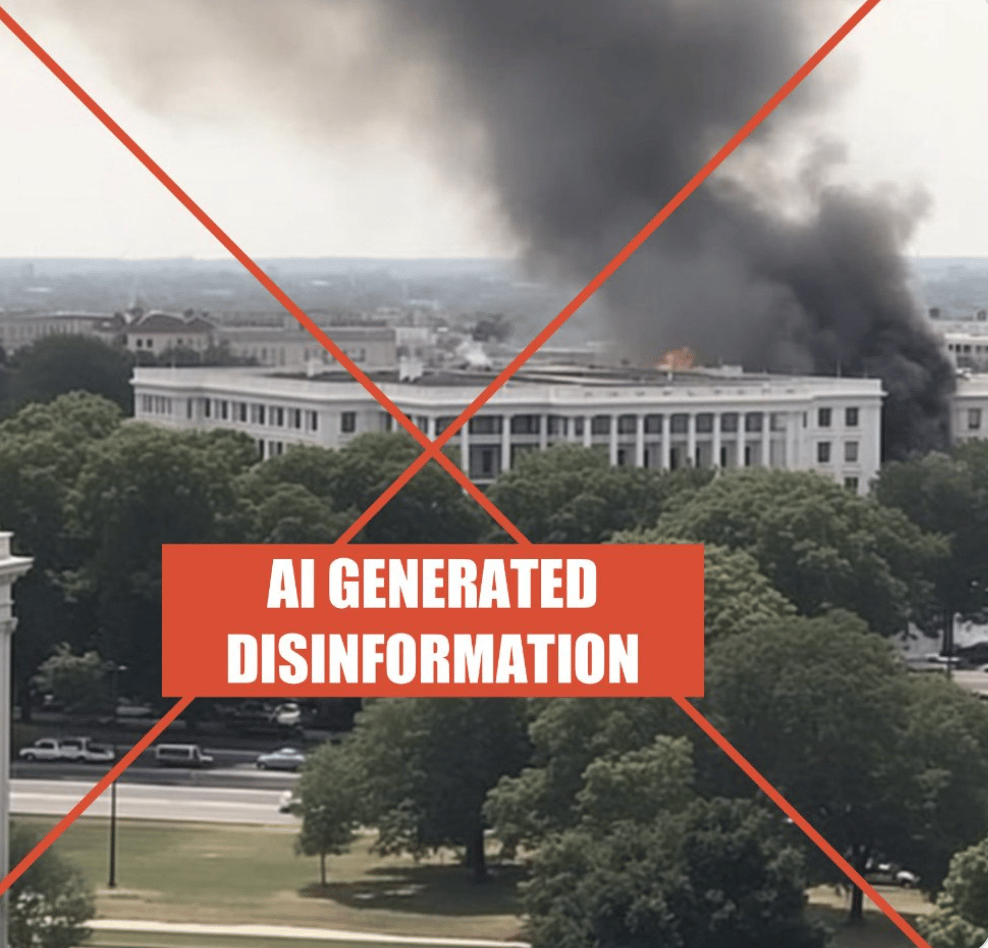

An A.I-generated image of an explosion at the Pentagon went VIRAL earlier this week.

So viral that many foreign news outlets were reporting it as fact.

Here’s the image (minus the red tape, of course).

Naturally, this caused a lot of panic.

The result?

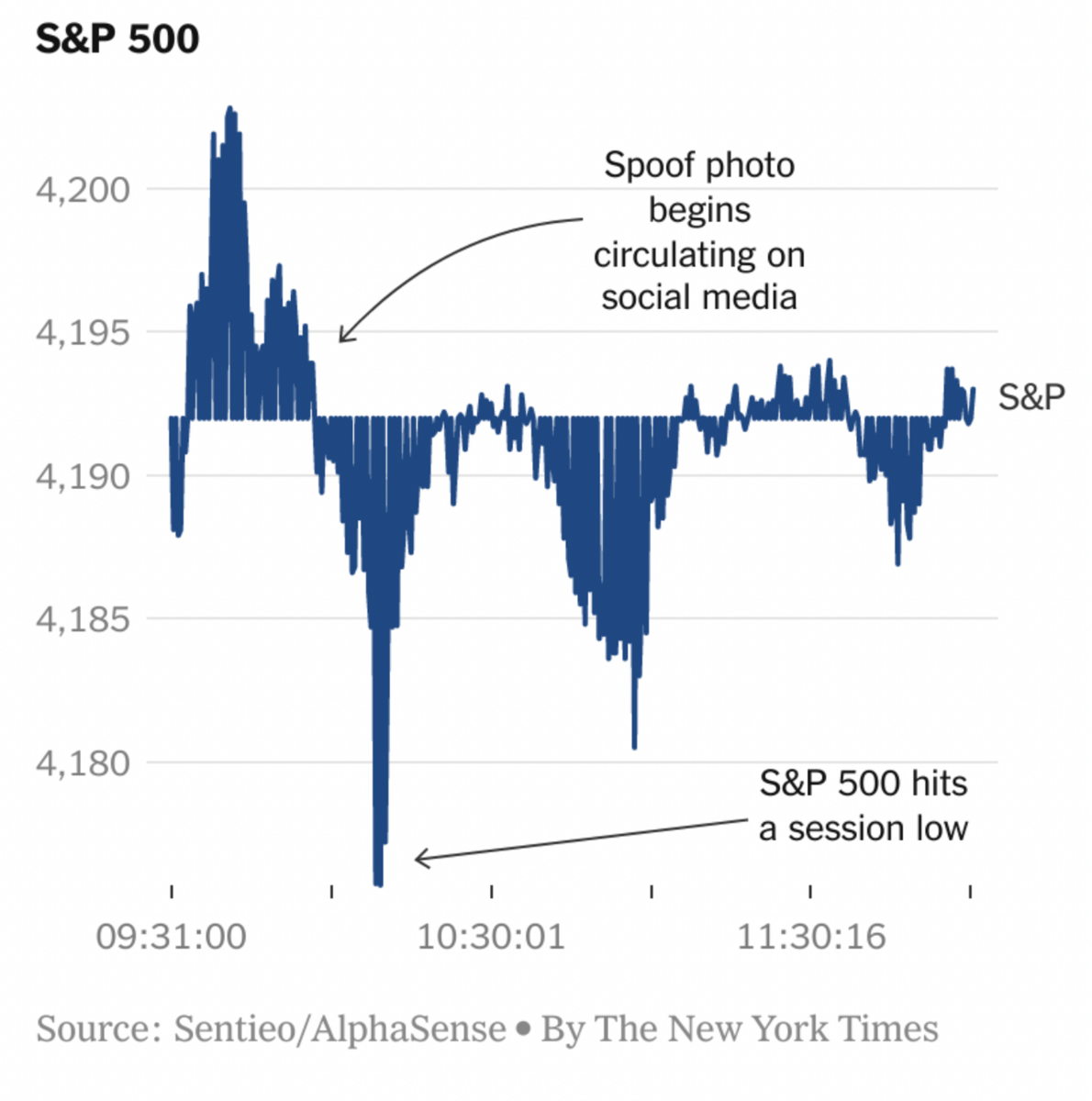

The S&P 500 index dropped about 80 points.

Yikes.

The photo was first posted by a verified Twitter account that looked like Bloomberg’s (a trusted news outlet).

Since the account had a blue check, plenty of people believed it was true.

Honestly, I can’t blame them. The blue check screams “Trust me, I’m real!”

But that’s a whole other issue.

Here’s what I’m focused on: fake news is a MAJOR issue, and A.I is making it worse.

Especially as new editing tools like ‘Drag Your GAN’ are released on the daily:

With tools like this, everyone and their grandma can edit any photo and make it look real.

So now, for the first time ever…

Seeing is NOT believing.

🤖 OpenAI Plans For Superintelligence

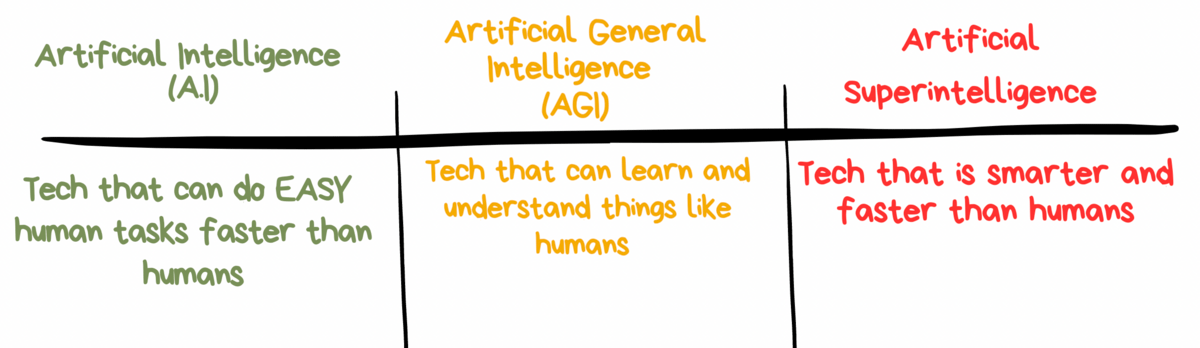

You’ve heard of AGI…

But have you heard of ‘Artificial Superintelligence’?

If not, let me break it down for you:

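Superintelligence is A.I that’s smarter than humans at pretty much everything. We have yet to see AGI (A.I that matches human-level intelligence), so very little thought has been given to superintelligence…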

Yet OpenAI thinks it could arrive within the next 10 years.

In other words, by 2033 we could be living in a world where bots are more capable than humans.

Once OpenAI realized this, it seems they had a ‘Well dang. We really should do something about this’ moment.

What did they do?

OpenAI released an article called ‘The Governance of Superintelligence’.

Don’t read it. It’s long and full of tech words.

Here’s what to know:

A.I developers need to talk and agree on safety. We can’t stop superintelligence; all we can do is manage it. That means the big developers need to have a good, long talk about what’s OK and what’s not.

We need international regulation. OpenAI suggests an organization like the IAEA (International Atomic Energy Agency) to oversee A.I development. However, they still want countries to be able to make their own decisions about censorship.

Involve the public in safety decisions. I’m glad someone said it…

Set the dos and don’ts. They don’t think EVERY A.I tool should be tested. Instead, they want to outline what developers can and can’t do. My question: who gets to write the list?

In a nutshell, OpenAI sees artificial superintelligence as a GOOD thing for society, as long as we learn to manage it and keep ourselves safe.

Now don’t get me wrong, A.I regulation is important…

But many can’t shake the feeling that OpenAI is trying to pull the rug out from under its competition.

What do you think?

Place your vote HERE

🐟 Byte Of The Day

🧠 Mind Memes

Special Thanks To This Reader: